|

Extended Residual Learning with One-shot Imitation Learning for Robotic Assembly in Semi-structured Environment

Chuang Wang, Chupeng Su, Bozheng Sun, Gang Chen* and Longhan Xie*

Frontiers in Neurorobotics, 2024


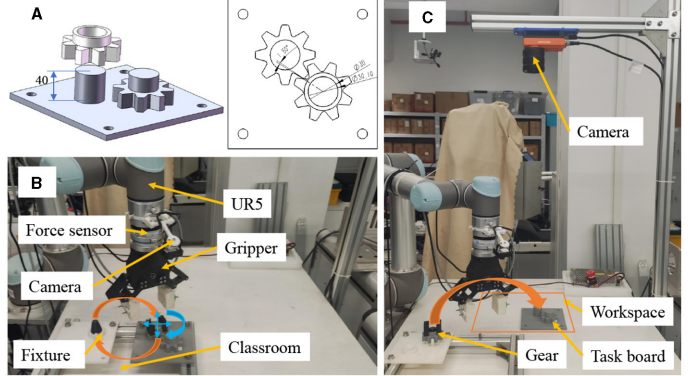

Robotic assembly tasks require precise manipulation and coordination, often necessitating advanced learning techniques to achieve efficient and effective performance. While residual reinforcement learning with a base policy has shown promise in this domain, existing base policy approaches often rely on hand-designed full-state features and policies or extensive demonstrations, limiting their applicability in semi-structured environments. In this study, we propose an innovative Object-Embodiment-Centric Imitation and Residual Reinforcement Learning (OEC-IRRL) approach that leverages an object-embodiment-centric (OEC) task representation to integrate vision models with imitation and residual learning. By utilizing a single demonstration and minimizing interactions with the environment, our method aims to enhance learning efficiency and effectiveness. The proposed method involves three key steps: creating an object-embodiment-centric task representation, employing imitation learning for a base policy using via-point movement primitives for generalization to different settings, and utilizing residual RL for uncertainty-aware policy refinement during the assembly phase. Through a series of comprehensive experiments, we investigate the impact of the OEC task representation on base and residual policy learning and demonstrate the effectiveness of the method in semi-structured environments. Our results indicate that the approach, requiring only a single demonstration and less than 1.2 hours of interaction, improves success rates by 46% and reduces assembly time by 25%. This research presents a promising avenue for robotic assembly tasks, providing a viable solution without the need for specialized expertise or custom fixtures.
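The residual-learning step described in the abstract can be pictured with a minimal sketch of how a residual correction is typically combined with a base policy's action. This is an illustration of the general residual RL idea, not the paper's implementation: the function name `compose_action`, the parameters `residual_scale` and `action_limit`, and the normalized action range are all assumptions introduced here.

```python
import numpy as np


def compose_action(base_action, residual_action, residual_scale=0.1, action_limit=1.0):
    """Combine a base-policy action with a small learned correction.

    All names and default values are hypothetical and chosen for illustration.
    """
    base = np.asarray(base_action, dtype=float)
    # Bound the raw residual to [-1, 1] so it cannot dominate the base action.
    residual = np.clip(np.asarray(residual_action, dtype=float), -1.0, 1.0)
    # The residual only nudges the base action; the sum is clipped to the action range.
    return np.clip(base + residual_scale * residual, -action_limit, action_limit)


if __name__ == "__main__":
    print(compose_action([0.5, -0.2], [1.0, -3.0]))
```

Keeping the residual scale small is one common way to let the base policy do most of the work while the learned term handles contact-level corrections.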

@article{wang2024extended,

title={Extended Residual Learning with One-shot Imitation Learning for Robotic Assembly in Semi-structured Environment},

author={Wang, Chuang and Su, Chupeng and Sun, Bozheng and Chen, Gang and Xie, Longhan},

journal={Frontiers in Neurorobotics},

year={2024}

}

|

|

Task attention-based multimodal fusion and curriculum residual learning for context generalization in robotic assembly

Chuang Wang, Ze Lin, Biao Liu, Chupeng Su, Gang Chen*, Longhan Xie*

Applied Intelligence, 2024


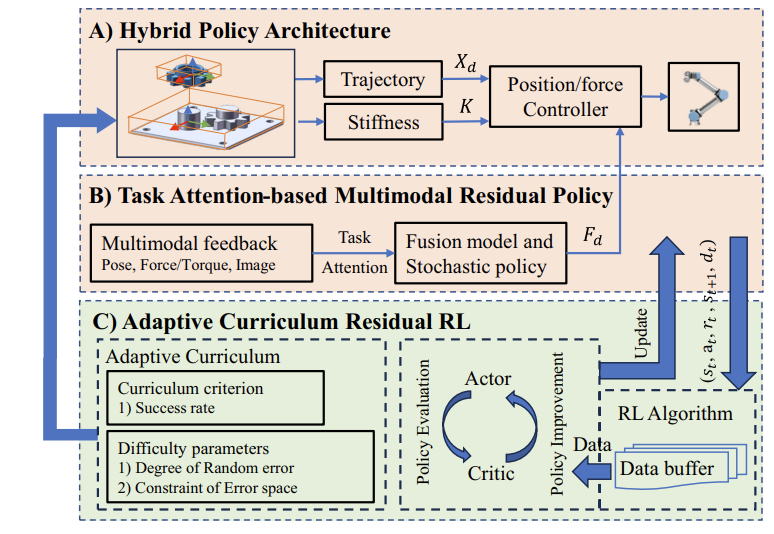

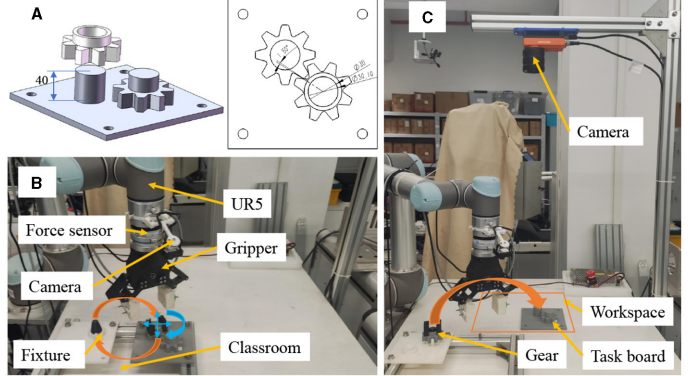

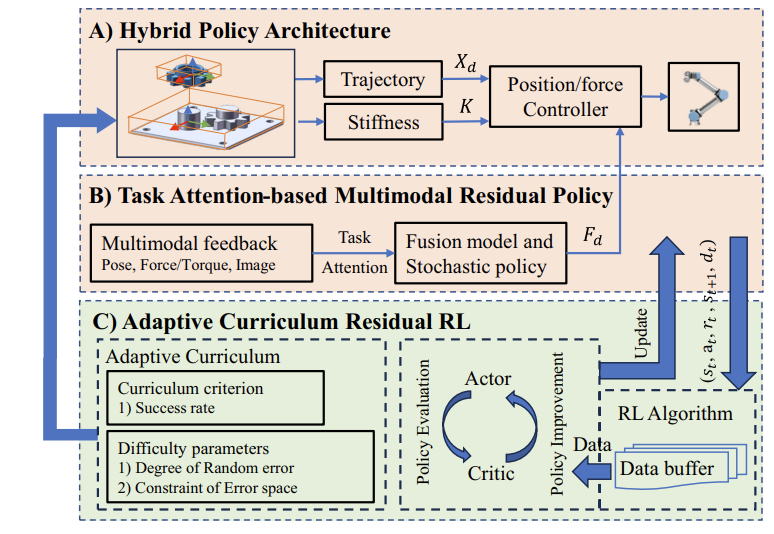

In the domain of flexible manufacturing, Deep Reinforcement Learning (DRL) has emerged as a pivotal technology for robotic assembly tasks. Despite advancements in sample efficiency and interaction safety through residual reinforcement learning with initial policies, challenges persist in achieving context generalization amidst stochastic systems characterized by large random errors and variable backgrounds. Addressing these challenges, this study introduces a novel framework that integrates task attention-based multimodal fusion with an adaptive error curriculum within a residual reinforcement learning paradigm. Our approach commences with the formulation of a task attention-based multimodal policy that synergizes task-centric visual, relative pose, and tactile data into a compact, end-to-end model. This model is explicitly designed to enhance context generalization by improving observability, thereby ensuring robustness against stochastic errors and variable backgrounds. The second facet of our framework, curriculum residual learning, introduces an adaptive error curriculum that intelligently modulates the guidance and constraints of a model-based feedback controller. This progression from perfect to significantly imperfect initial policies incrementally enhances policy robustness and learning process stability. Empirical validation demonstrates the capability of our method to efficiently acquire a high-precision policy for assembly tasks with clearances as tight as 0.1 mm and error margins up to 20 mm within a 3.5-hour training window, a feat challenging for existing RL-based methods. The results indicate a substantial reduction in average completion time by 75% and a 34% increase in success rate over the classical two-step approach. An ablation study was conducted to assess the contribution of each component within our framework. Real-world task experiments further corroborate the robustness and generalization of our method, achieving over a 90% success rate in variable contexts.
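The idea of an adaptive error curriculum, which moves from perfect to increasingly imperfect initial conditions, can be sketched as a simple success-rate-driven schedule. This is a hedged illustration only: the class `ErrorCurriculum`, the promotion threshold, the window size, and the step size are assumptions made here, and the abstract does not specify the paper's actual update rule. Only the 20 mm upper bound is taken from the text.

```python
from collections import deque


class ErrorCurriculum:
    """Hypothetical curriculum that widens the initial pose error as success improves."""

    def __init__(self, start_mm=0.0, max_mm=20.0, step_mm=2.0, window=10, promote_at=0.8):
        self.level_mm = start_mm      # current initial-error magnitude (assumed unit: mm)
        self.max_mm = max_mm          # 20 mm matches the largest error quoted in the abstract
        self.step_mm = step_mm        # assumed increment per promotion
        self.promote_at = promote_at  # assumed success-rate threshold
        self.results = deque(maxlen=window)

    def record(self, success):
        """Log one episode outcome and return the error level for the next episode."""
        self.results.append(bool(success))
        window_full = len(self.results) == self.results.maxlen
        if window_full and sum(self.results) / len(self.results) >= self.promote_at:
            # Promote: make the task harder, then start a fresh evaluation window.
            self.level_mm = min(self.level_mm + self.step_mm, self.max_mm)
            self.results.clear()
        return self.level_mm


if __name__ == "__main__":
    curriculum = ErrorCurriculum(window=2, step_mm=5.0)
    for outcome in (True, True, False, True, True):
        print(curriculum.record(outcome))
```

Under this sketch, the difficulty only grows once the policy succeeds consistently at the current level, which is one simple way to keep training stable while the initial error increases.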

@article{wang2024task,

title={Task attention-based multimodal fusion and curriculum residual learning for context generalization in robotic assembly},

author={Wang, Chuang and Lin, Ze and Liu, Biao and Su, Chupeng and Chen, Gang and Xie, Longhan},

journal={Applied Intelligence},

pages={1--23},

year={2024},

publisher={Springer}

}

|